Artificial Intelligence (AI) isn’t just a futuristic buzzword; it’s already here, woven into our daily lives. From voice assistants that wake us up to algorithms suggesting our next binge-worthy series, AI has become a silent partner in modern living. But with every leap forward, we face a critical question: how do we balance innovation with ethics? This is the fine line AI must walk.

The Rise of AI in Everyday Life

Take a moment to look around: AI is everywhere. Your smartphone predicts your typing, your car might park itself, and online shops know what you “want” before you even do. Companies like Digicleft Solution leverage AI to improve customer experiences, optimize marketing, and streamline operations. This accessibility makes innovation exciting, but it also makes the ethical questions harder to ignore.

Innovation in AI: The Double-Edged Sword

AI has brought jaw-dropping improvements. Self-driving cars promise safer roads, while AI diagnostic tools can flag some conditions faster than human clinicians. But let’s be real: when innovation sprints ahead of regulation, things can get messy. Without checks, AI could amplify inequality, invade privacy, or even make life-altering mistakes.

Understanding AI Ethics

So, what does “AI ethics” really mean? At its core, it’s about ensuring AI aligns with human values. Ethical AI follows three golden rules:

- Fairness – AI should treat everyone equally, free of bias.

- Responsibility – Someone must be accountable for AI’s actions.

- Transparency – Users deserve to know how AI decisions are made.

Sounds simple, right? But putting it into practice is far from easy.

The Fine Line: Innovation vs. Ethics

Here’s the thing: innovation thrives on freedom, but ethics demands responsibility. Think of it like driving a sports car: speed excites us, but without brakes (ethics), a crash is inevitable. Real-world dilemmas, like facial recognition misidentifying minorities, show how innovation without ethics can cause harm.

Data Privacy Concerns

AI runs on data. The more data, the smarter the system. But at what cost? When companies collect everything from browsing history to voice recordings, the line between personalization and intrusion blurs. Imagine an AI knowing more about you than your closest friend. That’s not just creepy; it’s a red flag for privacy.

Bias in AI Algorithms

Here’s the uncomfortable truth: AI reflects the data it’s fed. If the data is biased, the AI will be too. Cases of hiring algorithms favoring men or predictive tools unfairly targeting minorities have shown this. It’s like teaching a child the wrong values: they grow up repeating them.
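To make “biased output” a little more concrete, here is a minimal sketch of one simple check: comparing how often each group receives a positive decision. Everything in it is an assumption for illustration. The function names `selection_rates` and `disparate_impact_ratio` are hypothetical, the hiring data is made up, and real bias audits look at far more than a single ratio.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive decisions for each group.

    `records` is an iterable of (group, selected) pairs,
    where `selected` is True for a positive decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Made-up decisions from a hypothetical hiring model.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
```

In this invented example, one group is selected twice as often as the other. A common rule of thumb, sometimes called the four-fifths rule, treats a ratio below 0.8 as a signal worth investigating, not as proof of discrimination.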

Job Displacement and Economic Shifts

AI doesn’t just change technology; it reshapes economies. Yes, it creates new roles, but it also displaces traditional jobs. Imagine factories run mostly by machines or customer service completely replaced by bots. The ethical question is: do we leave workers behind, or do we reskill them for the future?

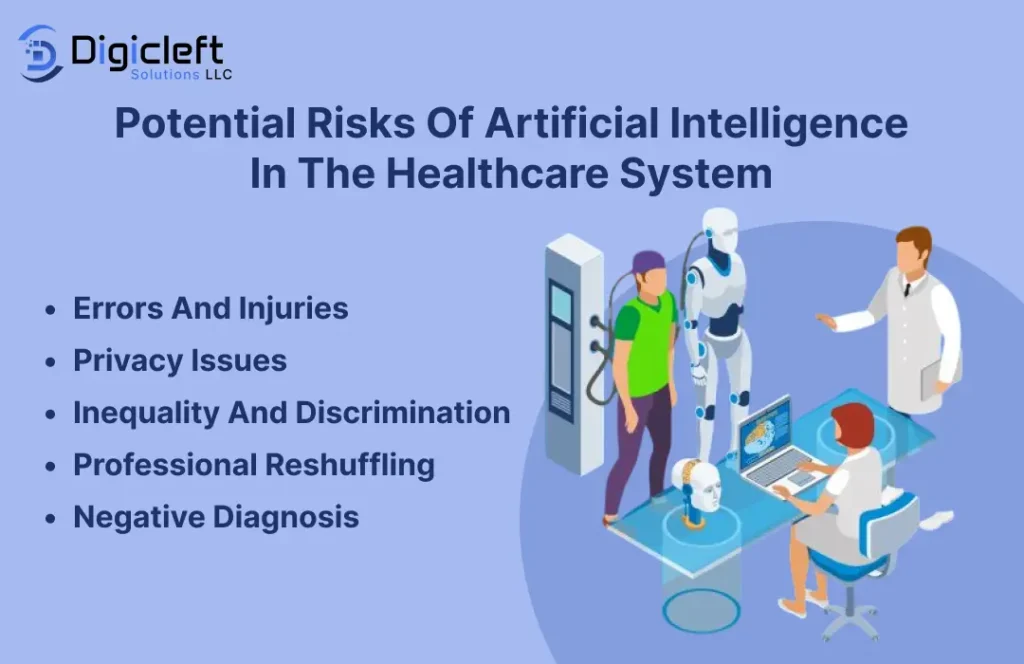

AI in Healthcare: A Blessing or a Risk?

AI in healthcare has saved lives by detecting conditions early, predicting outbreaks, and personalizing treatments. But over-reliance is dangerous. Would you trust a machine to decide your treatment? What happens if it makes a mistake? These questions highlight the fine line between innovation and patient safety.

AI and Law Enforcement

Predictive policing sounds innovative: stopping crimes before they happen. But in practice, it risks turning into mass surveillance. Misused, it could criminalize innocent communities based on biased data. Innovation here must be tightly bound by ethics.

Corporate Responsibility in AI

Companies are the driving force behind AI. That means they carry the responsibility to ensure their tools don’t harm society. Forward-thinking businesses like Digicleft Solution can set examples by building ethical frameworks, testing for bias, and promoting transparency.

Government Regulations and Policies

Governments worldwide are racing to create AI laws. The European Union’s AI Act, for instance, sorts AI systems into risk categories and sets rules for each. Without regulation, innovation can run wild, but too much regulation can stifle creativity. Striking that balance is the ultimate challenge.

The Role of Education in Ethical AI

Tech innovators need more than coding skills; they need ethical awareness. Schools and universities must teach future creators to think about AI’s societal impact. And it’s not just the experts; the public deserves to understand AI’s risks and rewards too.

Future of AI: Utopia or Dystopia?

The road ahead splits in two. On one side, AI helps humanity flourish: curing diseases, tackling climate change, making life easier. On the other, unchecked AI deepens inequality, erodes privacy, and risks control slipping from human hands. The choice depends on whether we keep ethics at the heart of innovation.

Conclusion

Balancing innovation and ethics in AI isn’t optional; it’s essential. The fine line we walk determines whether AI becomes our greatest ally or our worst nightmare. With companies, governments, and individuals working together, AI can truly serve humanity. And maybe that’s the real innovation: not in the machines we build, but in the ethical responsibility we uphold.

FAQs

1. Why is AI ethics important?

AI ethics ensures technology serves humans fairly, transparently, and responsibly, without causing unintended harm.

2. Can innovation and ethics in AI truly coexist?

Yes, but it requires conscious effort. Clear frameworks and accountability make it possible.

3. What role does data privacy play in AI ethics?

Data privacy protects individuals from misuse, ensuring AI respects personal boundaries.

4. How can companies like Digicleft Solution adopt ethical AI?

By testing for bias, being transparent about algorithms, and prioritizing user trust over profit alone.

5. What’s the future of AI if ethics are ignored?

Unregulated AI could worsen inequality, invade privacy, and create societal risks, pushing us closer to dystopia.